1 Introducing the .NET Framework with C#

The .NET Framework is such a comprehensive platform that it can be a little difficult to describe. I have heard it described as a Development Platform, an Execution Environment, and an Operating System among other things. In fact, in some ways each of these descriptions is accurate, if not sufficiently precise.

The software industry has become much more complex since the introduction of the Internet. Users have become both more sophisticated and less sophisticated at the same time. (I suspect that few individual users have undergone both metamorphoses, but as a body of users this has certainly happened.) Folks who had never touched a computer five years ago now comfortably include the Internet in their daily lives. Meanwhile, the technophile or professional computer user has become much more advanced, as have their expectations of software.

It is this collective expectation of software that drives our industry. Each time a software developer turns a new idea into a success, users' expectations for the next new feature rise. In a way this has been true for years. But now software developers face the added challenge of addressing the Internet and Internet users in many applications that in the past were largely unconnected. It is this new challenge that the .NET Framework directly addresses.

Saturday, March 14, 2009

1.1 Code in a Highly Distributed World

Software that addresses the Internet must be able to communicate. However, the Internet is not just about communication. This assumption has led the software industry down the wrong path in the past. Communication is simply the base requirement for software in an Inter-networked world.

In addition to communication, other features must be established. These include security, binary composability and modularity (which I will discuss shortly), scalability and performance, and flexibility. Even these just scratch the surface, but they are a good start.

Here are some features that users will expect in the near future. Users will expect to run code served by a server that is not limited to the abilities (or physical display window) of a browser. Users will expect websites and server-side code to compose themselves of data and functionality from various vendors, giving the end user flexible one-stop shopping. Users will expect their data and information to be secure and also to roam from site to site, so that they don’t have to type it in over and over again. These are tall orders, and these are the types of requirements that the .NET Framework addresses.

The requirements of the future cannot be addressed by a new programming language, or a new library of tools and reusable code, alone. Nor is it practical to require everyone to buy a new operating system that addresses the Internet directly. This is why the .NET Framework is at once a development environment, an execution environment and an Operating System.

One challenge for software in a highly distributed environment (like the Internet) is that many components are involved, each with different technological needs. For example, client software such as a browser or custom client has different needs than a server object or database element. Developers creating large systems often have to learn a variety of programming environments and languages just to create a single product.

Figure 1‑1 Internet Distributed Software

Take a look at Figure 1‑1. This depicts a typical arrangement of computers and software in a distributed application. This includes client/server communication on several tiers as well as peer-to-peer communication. In the past the tools that you used to develop code at each tier would likely be different, including different programming languages and code libraries.

The .NET Framework can be used to develop software logic at every point, from one end to the other. This way you get to use the language and programming tools that you are comfortable with at each stage of the development process. Additionally, the .NET Framework uses standards, so it is not necessary for every piece of the puzzle to be implemented with the framework. These are the goals of the .NET Framework.

I will describe what all this means in detail shortly.

1.2 C#: A First Taste of Managed Code

Software that is written using the .NET Framework is called Managed Code. (Legacy or traditional software is sometimes referred to as Unmanaged Code.) I will define managed code later in this tutorial. For now, think of managed code as code that runs with the aid of an execution engine that promotes the goals of Internet software. These goals include security, robustness, and object-oriented design, amongst others. Managed code is not interpreted; it runs in the native machine language of the host processor. But I am getting ahead of myself.

First things first, I would like to show a couple of examples of managed code written using the C# (pronounced see-sharp) language.

class App{
    public static void Main(){
        System.Console.WriteLine("Hello World!");
    }
}

Figure 1‑2 HelloConsole.cs

This short application is written using the C# language. The C# language is just one of the many languages that can be used to write managed code. This source code generates a program that displays the string “Hello World!” to the command line and then exits.

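using System.Windows.Forms;
using System.Drawing;

class MyForm : Form {
    public static void Main() {
        // Create the window and run the message loop until it is closed
        Application.Run(new MyForm());
    }

    // Called by Windows Forms whenever the window needs to be repainted
    protected override void OnPaint(PaintEventArgs e) {
        base.OnPaint(e);
        // Dispose of the font once drawing is finished
        using (Font font = new Font("Arial", 35)) {
            e.Graphics.DrawString("Hello World!", font, Brushes.Blue, 10, 100);
        }
    }
}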

Figure 1‑3 HelloGUI.cs

This slightly longer source sample is the GUI or windowed version of the Hello World! application. It takes advantage of a few more of the features of the .NET Framework to create a window in which to draw the message string.

Both Figure 1‑2 and Figure 1‑3 are examples of complete C# applications. One of the goals of the .NET Framework is to increase developer productivity and flexibility. One important way to do this is to make software easier to write.

As you can see, C# has an object-oriented, C-based syntax much like C++ or Java. This allows developers to build on previous experience when targeting the .NET Framework with their software.

Before moving on, I would like to point out some very simple details to jumpstart your exposure to C#. First, C# source code is typically maintained in files with a .cs extension. Note that both Figure 1‑2 and Figure 1‑3 are labeled with .cs names, indicating that they are complete, compilable C# modules.

Second, if you are writing an executable, your application must define an entry point function. (Modules containing nothing but reusable components do not require an entry point, but cannot be executed as stand-alone applications.) In C#, the entry point, if there is one, is always a static method named Main().

The Main() method can accept an array of strings holding the command-line arguments, and it can also return an integer value. The class in which Main() is defined can have any name. It is common to name this class App, but as you can see from Figure 1‑3, it is also fine to use another class name such as MyForm. When reading C# source code, look to the Main() method as a starting point.
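To make the previous paragraph concrete, here is a minimal sketch of a Main() that takes the command-line arguments and returns an exit code. The class name App follows the convention mentioned above; the usage message and the choice of 1 as the error code are illustrative assumptions, not requirements of C#.

```csharp
using System;

class App {
    // Entry point: args holds the command-line arguments (the program name is not included),
    // and the return value becomes the process exit code.
    public static int Main(string[] args) {
        if (args.Length == 0) {
            Console.WriteLine("Usage: HelloArgs name [name ...]");
            return 1; // by convention, a non-zero exit code signals a problem
        }
        foreach (string name in args) {
            Console.WriteLine("Hello, " + name + "!");
        }
        return 0; // zero signals success
    }
}
```

Compiled as, say, HelloArgs.exe (a hypothetical file name), running HelloArgs Alice Bob would print a greeting for each name and return 0 to the command shell.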

Exercise 1‑1 Compile Sample Code

1. The source code in Figure 1‑2 is a complete C# application.

2. Type in the code or copy it from the source code distributed with this tutorial. Save it into a file with the .cs extension.

3. Use either Visual Studio .NET or the command line compiler (named CSC.exe) to compile the application into an executable.

1. Hint: If you are using the command line compiler, the following line would compile a single module into an executable.

csc /target:exe HelloConsole.cs

2. Hint: If you are using the Visual Studio IDE then you should create an empty C# project, and add your .cs file to the project. Then build it to compile the exe.

4. Run the executable.

5. Try modifying the source code a little to change the text of the string, or perhaps to print several lines to the console window.

Exercise 1‑2 Compile GUI Sample Code

1. The source code in Figure 1‑3 is a complete GUI application written in C#. It will display a window with some text in it.

2. As you did in Exercise 1‑1, type in or copy the source code and save it in a .cs file.

3. Compile the source code using the command line compiler or the Visual Studio IDE.

1. Hint: If you are using Visual Studio you will need to add references for System.Drawing.dll, System.Windows.Forms.dll, and System.dll.

4. Run the new executable.

Exercise 1‑3 EXTRA CREDIT: Modify the GUI Application

1. Starting with the project from Exercise 1‑2, you will modify the GUI application.

2. Modify the code to draw the text more than once, at various locations in the window. Perhaps change the color of the text or the strings printed.

3. Consider using a for or while loop to adjust algorithmically where the text is drawn on the screen.
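One possible approach to Exercise 1‑3 is sketched below, assuming the same MyForm class as Figure 1‑3. The TextPosition helper, the brush colors, the font size and the spacing values are all arbitrary choices for illustration; the loop simply steps the drawing position diagonally across the window.

```csharp
using System.Drawing;
using System.Windows.Forms;

class MyForm : Form {
    public static void Main() {
        Application.Run(new MyForm());
    }

    // Hypothetical helper: where the i-th copy of the text is drawn.
    // Each copy moves 40 pixels right and 50 pixels down from the previous one.
    public static PointF TextPosition(int i) {
        return new PointF(10 + i * 40, 10 + i * 50);
    }

    protected override void OnPaint(PaintEventArgs e) {
        base.OnPaint(e);
        // Cycle through a few colors so each copy is easy to tell apart
        Brush[] brushes = { Brushes.Blue, Brushes.Red, Brushes.Green };
        using (Font font = new Font("Arial", 20)) {
            for (int i = 0; i < 6; i++) {
                e.Graphics.DrawString("Hello World!", font, brushes[i % brushes.Length], TextPosition(i));
            }
        }
    }
}
```

Keeping the position calculation in its own method makes it easy to experiment with other patterns, such as a grid or a circle, without touching the drawing code.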

2 Managed Code and the CLR

Remember that managed code is code written for (and with) the .NET Framework. Managed code is called managed because it runs under the constant supervision of an execution engine called the Common Language Runtime or CLR.

Figure 2‑1 Managed Code and the CLR

The CLR is similar to other existing execution engines such as the Java VM or the Visual Basic runtime. The CLR supplies memory management, type safety, code verifiability, thread management and security. However, the CLR also differs from previously existing environments in some important ways.

First and foremost, managed code is never interpreted. This statement is so important that it is worth repeating: managed code is never interpreted. Unlike code run by some other execution engines, code that runs under the supervision of the CLR runs natively in the machine language of the host CPU. I will touch on this in more detail shortly.

Secondly, the security model that the CLR enforces on managed code is different from the security of previous environments. Managed code does not run in a virtual machine, and it does not run in a sandbox. However, the Common Language Runtime does apply restrictions to managed code based on where the code comes from. Managed security is a flexible and powerful feature; an entire tutorial in this series is devoted to the topic.

Before describing what the CLR does and how it does it, I would like to take a moment to address the need for managed code. Managed code makes it possible for applications to be composed of parts that they were never tested with, and that may not even have existed at the time of development.

For example, Acme Widgets sells its products through a website. WeShipit Delivery Service provides shipping. If Acme publishes a shipping interface for its website, then WeShipit could become a shipping agent for Acme’s site. With managed code, it is feasible for WeShipit’s shipping code and web interface code to plug right into Acme’s website without anybody at Acme lifting a finger, or even being aware of the addition to their site.
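The idea of Acme publishing a shipping interface can be sketched in C# as an interface that Acme's code depends on, plus a class that WeShipit supplies. Everything here is hypothetical: the IShippingProvider, WeShipitProvider and ProviderLoader names, the QuoteShipping signature and the rates are invented for illustration. In a real deployment WeShipit's class would live in its own separately compiled assembly, which Acme might load at run time with Assembly.LoadFrom; here both live in one file so the sketch is self-contained.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Reflection;

// Hypothetical contract that Acme publishes for shipping partners.
public interface IShippingProvider {
    string Name { get; }
    decimal QuoteShipping(decimal weightKg, string destination);
}

// Hypothetical WeShipit implementation, normally compiled into WeShipit's own assembly.
public class WeShipitProvider : IShippingProvider {
    public string Name { get { return "WeShipit Delivery Service"; } }

    public decimal QuoteShipping(decimal weightKg, string destination) {
        // Made-up pricing: a flat fee plus a per-kilogram rate
        return 5.00m + 1.25m * weightKg;
    }
}

public static class ProviderLoader {
    // Finds and instantiates every concrete IShippingProvider in an assembly.
    // Acme's code depends only on the interface, never on WeShipitProvider itself.
    public static List<IShippingProvider> LoadProviders(Assembly assembly) {
        return assembly.GetTypes()
            .Where(t => t.IsClass && !t.IsAbstract && typeof(IShippingProvider).IsAssignableFrom(t))
            .Select(t => (IShippingProvider)Activator.CreateInstance(t))
            .ToList();
    }
}
```

Because Acme's code only ever talks to IShippingProvider, a new provider could be dropped in without Acme's code being recompiled; the CLR's type checking and verification are part of what makes it safer to run such a component in the same process.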

This kind of flexibility will create some exciting opportunities for composing software across the Internet. (Imagine an online game where the players can write software to define their characters and their characters’ items.) However, it raises practical concerns such as faulty or malicious components, performance, type compatibility and type safety. These are the reasons that a managed environment is necessary. At the same time, the last thing software developers want is to suffer the performance hit of an interpreted environment.

2.1 Intermediate Language, Metadata and JIT Compilation

Managed code is not interpreted by the CLR; I mentioned that earlier. But how is it possible for native machine code to be verifiably type-safe, secure, and fault tolerant? The answer comes in three parts: Common Intermediate Language, Metadata and JIT Compilation.

Common Intermediate Language (often called Intermediate Language or IL) is an abstracted assembly language. The designers of the .NET Framework worked with many professional and academic institutions, over the course of more than five years, to define an assembly language for a CPU that doesn’t exist. One of the goals of IL was to be completely CPU-agnostic, so that it translates well to ANY CPU.

IL is high-level for an assembly language, and includes instructions for such advanced programming concepts as newing up an instance of an object or calling a virtual function. And when I said “translate” in the previous paragraph, that’s exactly what I meant. At runtime, the CLR translates these and every other IL instruction into native machine instructions, and then executes the code natively. This translation is called Just-In-Time Compilation, or JIT Compiling.

So what about the remaining item, metadata? The easiest way to understand metadata is to start with IL instructions. IL instructions describe the executable logic of your source code: the many branches, loops, comparisons, etc. of your software. The IL instructions embody the logic of your managed software. Metadata is all of the other stuff.

Metadata describes class definitions, method signatures, parameter types and return types. Metadata describes binding rules for types found in external binary modules (called managed assemblies). Metadata describes every aspect of a program other than the executable logic itself.

In fact, a managed executable is nothing but IL and metadata. This is an important point. Traditional executables typically include the instructions of the program, but the definitions for things like classes and function calls are lost at compilation time. However, with managed executable files, the metadata and IL instructions always live together in the same file. A managed executable is IL and metadata.
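You can see for yourself that the metadata survives compilation by reading it back at run time through reflection. The sketch below, which assumes a small hypothetical Calculator class, uses System.Reflection to rebuild a method's signature from metadata alone and then fetches the raw IL bytes of its body with MethodBody.GetILAsByteArray(). The exact IL bytes depend on the compiler and on whether you build in debug or release mode, so the sketch prints them rather than assuming particular values.

```csharp
using System;
using System.Reflection;

// Hypothetical class whose metadata and IL we inspect.
public class Calculator {
    public static int Add(int a, int b) {
        return a + b;
    }
}

public static class MetadataDemo {
    // Rebuilds a method signature purely from the metadata stored in the assembly.
    public static string Describe(MethodInfo method) {
        string parameters = string.Join(", ",
            Array.ConvertAll(method.GetParameters(), p => p.ParameterType.Name + " " + p.Name));
        return method.ReturnType.Name + " " + method.DeclaringType.Name + "." + method.Name + "(" + parameters + ")";
    }

    public static void Main() {
        MethodInfo add = typeof(Calculator).GetMethod("Add");
        Console.WriteLine(Describe(add)); // prints: Int32 Calculator.Add(Int32 a, Int32 b)

        // The IL for the method body lives in the same file as the metadata.
        byte[] il = add.GetMethodBody().GetILAsByteArray();
        Console.WriteLine("IL bytes: " + BitConverter.ToString(il));
    }
}
```

A disassembler such as ildasm.exe, which ships with the .NET Framework SDK, shows the same metadata and IL in readable form.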

Figure 2‑2 From Source Code to Managed Executable

This helps to complete the picture of managed code and JIT compilation. The JIT compiler, which is part of the CLR, uses both the metadata and the IL from a managed executable to create machine language instructions at runtime. These machine language instructions are then executed natively. In the process of JIT compiling the code, however, some very important things happen. First, type safety and security are verified. Second, code correctness is verified (no dangling memory references, and no reading of unassigned data). Third, code executes at native speed. And fourth, processor independence comes along for the ride.

Figure 2‑3 From IL to Execution

Managed code and JIT compilation bring a lot to the equation. If a managed executable attempts to break a security rule, the CLR will catch this at verification or JIT compilation time and will refuse to execute the code. If a managed executable references unassigned data, or attempts to coerce a data type into an incompatible type (through typecasting), the CLR will catch this too, either by rejecting the code during verification or by throwing an exception at run time, rather than letting the code corrupt memory. And your code runs at full speed on your hardware.
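Here is a small sketch of the run-time side of that type checking, assuming a hypothetical CastDemo class. A boxed value is unboxed with a cast; when the object is not actually an int, the CLR throws InvalidCastException instead of reinterpreting the object's bits. Reading an unassigned local variable, by contrast, is rejected even earlier: the C# compiler reports error CS0165 and never produces the executable.

```csharp
using System;

public static class CastDemo {
    // Tries to unbox an object as an int. The runtime checks the object's actual type
    // and throws InvalidCastException if it is not a boxed int.
    public static bool TryUnboxInt(object value, out int result) {
        try {
            result = (int)value;
            return true;
        } catch (InvalidCastException) {
            result = 0;
            return false;
        }
    }

    public static void Main() {
        int n;
        Console.WriteLine(TryUnboxInt(42, out n));          // prints True
        Console.WriteLine(TryUnboxInt("forty-two", out n)); // prints False
    }
}
```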

The CLR, through code management, will increase the functionality and robustness of your traditional console or GUI applications. However, for widely distributed applications that make use of components from many sources, the advantages of managed code are a necessity.

2.2 Automatic Memory Management

The Common Language Runtime does more for your C# and .NET managed executables than just JIT compile them. The CLR offers automatic thread management, security management, and, perhaps most importantly, memory management.

Memory management is an unavoidable part of software development. Commonly, memory management is implemented, to one degree or another, by the application. However, it is the sheer commonality of memory management, combined with its potential complexity, that makes it better suited as a system service.

Here are some simple things that can go wrong in software.

· Your code can reference a data block that has not been initialized. This can cause instability and erratic behavior in your software.

· Software may fail to free up a memory block after it is finished with the data. Memory leaks can cause an application or an entire system to fail.

· Software may reference a memory block after it has been freed up.

There may be other memory-management related bugs, but the great majority will fall under one of these main categories.

Developers are increasingly taxed with complex requirements, and the mundane task of managing the memory for objects and data types can be tedious. Furthermore, when executing component code from an untrusted source (perhaps across the Internet) in the same process as your main application code, you want to be absolutely certain that the untrusted code cannot obtain access to the memory for your data. These things make automatic memory management a necessity for managed code.

All programs running under the .NET Framework or Common Language Runtime allocate memory from a managed heap. The managed heap is maintained by the CLR. It is used for all memory resources, including the space required to create instances of objects, as well as the memory required for data buffers, strings, collections, stacks and caches.

The managed heap knows when a block of data is referenced by your application (or by another object in the heap), in which case that object will be left alone. But as soon as a block of memory becomes an unreferenced item, it is subject to garbage collection. Garbage collection is an automatic part of the processing of the managed heap, and happens as needed.
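The sketch below illustrates that rule using the real System.WeakReference and GC classes; the GcDemo class and its method names are hypothetical. A WeakReference lets you observe an object without keeping it alive, so once the only strong reference goes out of scope, a garbage collection is free to reclaim the object. Calling GC.Collect() directly is done here only to make the demonstration deterministic; ordinary code leaves the timing of collections to the CLR.

```csharp
using System;
using System.Runtime.CompilerServices;

public static class GcDemo {
    // Allocates a buffer on the managed heap and returns only a weak reference to it,
    // so after this method returns nothing in our code keeps the buffer reachable.
    // NoInlining keeps the JIT from folding this frame (and the buffer's lifetime) into the caller.
    [MethodImpl(MethodImplOptions.NoInlining)]
    public static WeakReference AllocateAndForget() {
        byte[] buffer = new byte[1024];
        return new WeakReference(buffer);
    }

    public static bool IsCollected() {
        WeakReference weak = AllocateAndForget();
        // Forcing a collection is for demonstration only.
        GC.Collect();
        GC.WaitForPendingFinalizers();
        GC.Collect();
        return !weak.IsAlive;
    }

    public static void Main() {
        Console.WriteLine("Collected: " + IsCollected());
    }
}
```

On a typical run this should report that the buffer was collected, because nothing but the weak reference still points to it.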

Your code will never explicitly clean-up, delete, or free a block of memory, so therefore it is impossible to leak memory. Memory is considered garbage when it is no longer referenced by your code, so therefore it is impossible for your code to reference a block of memory that has already been freed or garbage collected. Finally, because the managed heap is a pointer-less environment (at least from your managed code’s point of view), it is possible for the code verifier to make it impossible for managed code to read a block of memory that has not been written to first.

The managed heap makes all three of the major memory management bugs an impossibility.

The Common Language Runtime does more for your C# and other managed executables than just JIT compile them. The CLR offers automatic thread management, security management, and, perhaps most importantly, memory management.

Memory management is an unavoidable part of software development. Traditionally, each application implements memory management itself, to one degree or another. However, it is precisely because memory management is so common, and potentially so complex, that it is better suited to being a system service.

Here are some simple things that can go wrong in software.

· Your code can reference a data block that has not been initialized. This can make your software unstable and cause erratic behavior.

· Software may fail to free up a memory block after it is finished with the data. Memory leaks can cause an application or an entire system to fail.

· Software may reference a memory block after it has been freed up.

There may be other memory-management bugs, but the great majority fall into one of these three categories.

Developers are increasingly taxed with complex requirements, and the mundane task of managing the memory for objects and data types can be tedious. Furthermore, when you execute component code from an untrusted source (perhaps downloaded from the Internet) in the same process as your main application code, you want to be absolutely certain that the untrusted code cannot access the memory that holds your data. These concerns make automatic memory management a necessity for managed code.

All programs running under the .NET Framework (that is, under the Common Language Runtime) allocate their objects from a managed heap, which the CLR maintains. The managed heap holds every object your code creates: instances of your classes, as well as data buffers (arrays), strings, and collections such as stacks and caches. (Local variables of value types, such as an int declared inside a method, live on the thread’s stack rather than on the managed heap.)

The managed heap knows when a block of data is referenced by your application (or by another object in the heap), and it leaves any such object alone. But as soon as a block of memory is no longer referenced, it becomes subject to garbage collection. Garbage collection is an automatic part of managing the heap and happens as needed.

Your code never explicitly cleans up, deletes, or frees a block of memory, so it is impossible to forget to free one, and the classic memory leak cannot happen. (Memory can still be held longer than necessary if your code keeps a reference to an object it no longer needs, for example in a static collection, because a referenced object is never garbage.) Memory is considered garbage only when it is no longer referenced by your code, so your code cannot reference a block of memory that has already been freed or garbage collected. Finally, the managed heap is a pointer-less environment (at least from your managed code’s point of view), and the CLR zero-initializes newly allocated objects. Together these let the code verifier guarantee that managed code never reads a block of memory that has not been written to first.

For verifiable managed code, the managed heap therefore makes all three of these major memory management bugs, in the forms described above, an impossibility.
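A few lines of ordinary C# show these guarantees in action. The sketch is illustrative, and its names (Account, MemorySafetyDemo, CacheBuffer) are hypothetical. New arrays and object fields start out zeroed, and the compiler rejects reads of unassigned locals. Nothing is ever explicitly freed, and the only way to keep memory alive is to keep a reference to it, as the static cache does.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical example type: its fields are never explicitly initialized.
class Account
{
    public int Balance;      // starts at 0
    public string Owner;     // starts at null
}

static class MemorySafetyDemo
{
    // Objects reachable from a static field are never garbage. Adding to this
    // list without ever removing anything is the managed version of a "leak".
    static readonly List<byte[]> cache = new List<byte[]>();

    // The CLR hands back zeroed memory, so every element is 0.
    public static byte[] NewBuffer(int size)
    {
        return new byte[size];
    }

    // Fields of a new object hold their default values (0, null, false).
    public static Account NewAccount()
    {
        return new Account();
    }

    // The buffer stays alive for as long as the list refers to it.
    public static int CacheBuffer(byte[] buffer)
    {
        cache.Add(buffer);
        return cache.Count;
    }

    static void Main()
    {
        byte[] buffer = NewBuffer(8);
        Console.WriteLine(buffer[7]);              // 0
        Console.WriteLine(NewAccount().Balance);   // 0

        // int x;
        // Console.WriteLine(x);   // compile-time error CS0165: use of unassigned local variable

        // There is no delete or free: once Main no longer uses 'buffer',
        // the garbage collector is free to reclaim it.
    }
}
```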

2.3 Language Concepts and the CLR

Managed code runs under the constant supervision of the Common Language Runtime. The CLR provides memory management, type management, security and threading; in this respect, the CLR is a runtime environment. However, unlike typical runtime environments, managed code is not tied to any particular programming language.

You have most likely heard of C# (pronounced See-Sharp). C# is a new programming language built specifically to write managed software targeting the .NET Framework. However, C# is by no means the only language that you can use to write managed code. In fact, any compiler developer can choose to make their compiler generate managed code; the only requirement is that the compiler emit an executable composed of valid IL and metadata.

At this time Microsoft is shipping five language compilers/assemblers with the .NET Framework: C#, Visual Basic, C++, JScript, and IL. (Yes, you can write managed code directly in IL, but this will be as uncommon as writing assembly language programs is today.) In addition to these five languages, Microsoft will release a Java compiler that generates managed applications that run on the CLR.

In addition to Microsoft’s language compilers, third parties are producing compilers for over 20 computer languages, all targeting the .NET Framework. You will be able to write managed applications in your favorite language, including Eiffel, Perl, COBOL and Java, among others.

Language agnosticism is really cool. Your Perl scripts will be able to take advantage of the same object libraries that you use in your C# applications. Meanwhile, your friends and coworkers will be able to use your reusable components whether or not they use the same programming language as you. This separation of runtime engine, API (Application Programming Interface), and language syntax is a real win for developers.

The CLR does not need to know (nor will it ever know) anything about any computer language other than IL. All managed software is compiled down to IL instructions and metadata, and these are the only things that the CLR deals with. This is important because it makes every computer language an equal citizen from the CLR’s point of view. By the time JIT compilation occurs, your program is nothing but IL and metadata.
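The following C# sketch makes "IL and metadata" concrete. It uses the FCL’s reflection classes to read back the metadata that the C# compiler emitted for a type. The Temperature and MetadataDemo names are hypothetical. Any other .NET language that consumed this compiled type would see exactly the same description.

```csharp
using System;
using System.Reflection;

// A hypothetical type; the compiler records its shape in metadata.
public class Temperature
{
    public static double ToFahrenheit(double celsius)
    {
        return celsius * 9 / 5 + 32;
    }
}

static class MetadataDemo
{
    // Describe a method using only the metadata stored in the compiled assembly.
    public static string Describe(Type type, string methodName)
    {
        MethodInfo method = type.GetMethod(methodName);
        ParameterInfo[] parameters = method.GetParameters();
        string[] names = new string[parameters.Length];
        for (int i = 0; i < parameters.Length; i++)
        {
            names[i] = parameters[i].ParameterType.Name + " " + parameters[i].Name;
        }
        return method.ReturnType.Name + " " + method.Name + "(" + string.Join(", ", names) + ")";
    }

    static void Main()
    {
        // Prints: Double ToFahrenheit(Double celsius)
        Console.WriteLine(Describe(typeof(Temperature), "ToFahrenheit"));

        // The method's IL body is available too, as raw bytes.
        byte[] il = typeof(Temperature).GetMethod("ToFahrenheit").GetMethodBody().GetILAsByteArray();
        Console.WriteLine("IL size: " + il.Length + " bytes");
    }
}
```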

IL itself is geared towards object oriented languages. However, compilers for procedural or scripted languages can easily produce IL to represent their logic.

2.4 Advanced Topics for the Interested

If you are one of those who just must know the details, then this section is for you. But if you are looking for a practical, brief overview of the .NET Framework, you can skip ahead to the next section right now and come back to this section when you have more time.

Specifically, I am going to explain JIT compilation and garbage collection in more detail.

The first time a managed executable references a class or other type (such as a structure, interface, enumerated type or primitive type), the system must load the managed module that implements the type. When the type is loaded, the CLR creates a method stub in native machine language for every method in the newly loaded type. Each stub contains nothing but a jump into a special function in the JIT compiler.

Once the stub functions are created, the system fixes up any method calls in the referencing code to point to the new stubs. At this point no JIT compilation of the type’s code has occurred. However, if a managed application references a managed type, it is almost certain to call methods on that type.

When one of the stub functions is called, the JIT compiler looks up the method’s IL and metadata in the associated managed module and builds native machine code for the method on the fly. It then replaces the stub with a jump to the newly compiled function. The next time the same method is called, the native code runs at full speed, with no need for compilation or any other extra steps.

The good thing about this approach is that the system never wastes time JIT compiling methods that won’t be called by this run of your application.

Finally, when a method is JIT compiled, any types that it references are checked by the CLR to see if they are new to this run of the application. If this is indeed the first time a type has been referenced, then the whole process starts over again for this type. This is how JIT compilation progresses throughout the execution of a managed application.
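The stub-and-patch scheme can be modeled in a few lines of C#. This is only a simulation built on stated assumptions: a delegate plays the role of the native stub, and "compiling" means building the real delegate and counting how often that happens. The names LazyMethod and CompileCount are hypothetical. The real CLR performs these steps in native code.

```csharp
using System;

// A simulation of JIT stubs: the first call goes through a stub that
// "compiles" the method and patches the call target; later calls go
// straight to the compiled code.
class LazyMethod
{
    private Func<int, int> target;                 // the current call target
    private readonly Func<Func<int, int>> compile; // stands in for the JIT compiler
    public int CompileCount { get; private set; }

    public LazyMethod(Func<Func<int, int>> compile)
    {
        this.compile = compile;
        target = Stub;                             // every method starts out as a stub
    }

    // The stub: compile once, patch the target, then run the real code.
    private int Stub(int argument)
    {
        CompileCount++;
        target = compile();
        return target(argument);
    }

    public int Invoke(int argument)
    {
        return target(argument);
    }
}

static class JitDemo
{
    static void Main()
    {
        LazyMethod square = new LazyMethod(() => x => x * x);
        Console.WriteLine(square.Invoke(3));         // 9  (the stub runs and "compiles")
        Console.WriteLine(square.Invoke(4));         // 16 (goes straight to compiled code)
        Console.WriteLine(square.CompileCount);      // 1

        LazyMethod neverCalled = new LazyMethod(() => x => -x);
        Console.WriteLine(neverCalled.CompileCount); // 0: never called, so never compiled
    }
}
```

The last two lines mirror the point made above: a method that this run of the application never calls is never compiled.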

Take a deep breath, and exhale slowly, because now I am going to switch gears and discuss the garbage collector.

Garbage collection is a process that takes time. The CLR must halt all or most of the threads in your managed application when garbage buffers and garbage objects are cleaned out of the managed heap. Performance is important, so it can help to understand the garbage collection process.

Garbage collection does not run continuously in the background. Instead, it is triggered on demand: a collection normally happens only when an attempt to new-up an object or memory buffer finds that there is not enough free memory on the managed heap to fulfill the request. The collection then tries to free up enough memory to satisfy it. (Code can also request a collection explicitly by calling GC.Collect.)

When a garbage collection occurs, the system first finds all objects referenced by the application’s roots: local (stack) variables, static fields (the closest thing managed code has to global variables), and CPU registers. These objects are not garbage, because your running threads can reach them. Next, the system searches those objects for references to further objects; these are not garbage either. The search continues until no new referenced objects are found. Every other object is garbage, and its memory can be reclaimed.

Figure 2‑4 Managed Objects in the Managed Heap
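The marking walk described above is a graph traversal that starts at the roots. Here is a minimal C# model of it. It assumes a toy heap in which objects are numbered nodes and each node lists the nodes it references. The names are hypothetical, and the real collector works on actual object layouts and roots. Notice that objects 4 and 5, which refer only to each other, are still found to be garbage.

```csharp
using System;
using System.Collections.Generic;

static class MarkDemo
{
    // Returns the set of objects reachable from the roots. Everything else is garbage.
    public static HashSet<int> Mark(Dictionary<int, int[]> references, IEnumerable<int> roots)
    {
        HashSet<int> live = new HashSet<int>();
        Stack<int> pending = new Stack<int>(roots);
        while (pending.Count > 0)
        {
            int obj = pending.Pop();
            if (!live.Add(obj)) continue;          // already marked
            int[] children;
            if (references.TryGetValue(obj, out children))
            {
                foreach (int child in children) pending.Push(child);
            }
        }
        return live;
    }

    static void Main()
    {
        // Toy heap: 1 refers to 2, 2 refers to 3; 4 and 5 refer only to each other.
        Dictionary<int, int[]> heap = new Dictionary<int, int[]>
        {
            { 1, new[] { 2 } },
            { 2, new[] { 3 } },
            { 3, new int[0] },
            { 4, new[] { 5 } },
            { 5, new[] { 4 } },
        };
        HashSet<int> live = Mark(heap, new[] { 1 });   // object 1 is held by a local variable

        foreach (int obj in heap.Keys)
        {
            Console.WriteLine(obj + (live.Contains(obj) ? ": live" : ": garbage"));
        }
    }
}
```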

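During garbage collection, the managed heap is compacted: referenced objects are moved down to fill the space freed by garbage objects, and the references to them are updated. As a result, managed applications use memory much more efficiently, because fragmentation of the managed heap is largely avoided. (Very large objects, which the CLR places on a separate large object heap that it does not compact, are the main exception.)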
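Compaction can be sketched the same way. This minimal C# model uses hypothetical names and a toy heap described only by each object’s size and a live flag. It slides every surviving object down toward the start of the heap and reports each object’s new offset. That offset is the information the collector needs in order to update references.

```csharp
using System;

static class CompactDemo
{
    // sizes[i] is the size of the i-th object in heap order; live[i] says whether it survived marking.
    // Returns the new offset of each survivor (or -1 for garbage) and the new end of the heap.
    public static int[] Compact(int[] sizes, bool[] live, out int heapEnd)
    {
        int[] newOffsets = new int[sizes.Length];
        int next = 0;                        // next free byte after compaction
        for (int i = 0; i < sizes.Length; i++)
        {
            if (live[i])
            {
                newOffsets[i] = next;        // survivor slides down to 'next'
                next += sizes[i];
            }
            else
            {
                newOffsets[i] = -1;          // garbage: its space is reclaimed
            }
        }
        heapEnd = next;                      // all free space is now one block starting here
        return newOffsets;
    }

    static void Main()
    {
        int[] sizes = { 16, 32, 8, 24 };
        bool[] live = { true, false, true, false };
        int heapEnd;
        int[] offsets = Compact(sizes, live, out heapEnd);
        Console.WriteLine(string.Join(", ", offsets));   // 0, -1, 16, -1
        Console.WriteLine(heapEnd);                       // 24
    }
}
```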

Although garbage collection itself can be time consuming (though a collection usually takes well under a second), memory allocation is very fast. Free memory on the managed heap is kept in one contiguous block, so new objects are allocated one after another, much like allocations on a stack. As a result, the great majority of memory allocations amount to little more than a pointer addition.
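Allocation is cheap because the free space on a compacted heap is one contiguous block, so allocating takes only a bounds check and a pointer addition. The following C# model shows the idea. The class name BumpHeap and the 64-byte capacity are illustrative assumptions. The model also marks the point at which the real CLR would start a garbage collection.

```csharp
using System;

class BumpHeap
{
    private readonly int capacity;
    private int nextFree;                  // the "next object pointer"

    public BumpHeap(int capacity)
    {
        this.capacity = capacity;
    }

    // Returns the offset of the new block, or -1 when there is not enough room.
    // In the real CLR, running out of room is what triggers a garbage collection.
    public int Allocate(int size)
    {
        if (nextFree + size > capacity)
            return -1;
        int result = nextFree;
        nextFree += size;                  // allocation is just a pointer addition
        return result;
    }

    static void Main()
    {
        BumpHeap heap = new BumpHeap(64);
        Console.WriteLine(heap.Allocate(16));   // 0
        Console.WriteLine(heap.Allocate(32));   // 16
        Console.WriteLine(heap.Allocate(32));   // -1: time for a garbage collection
    }
}
```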

Of course, there are many more details to JIT compilation and the managed heap, but these advanced facts might whet your appetite to explore these topics further. (See Jeffrey Richter’s MSDN article for more information on the garbage collector.)

3 Visual Studio .NET

This section is a short one, but I cannot go on without mentioning Visual Studio .NET. Visual Studio .NET is not part of the .NET Framework, but it deserves mention in an introduction to the .NET Framework. Visual Studio is an integrated development environment (IDE) published by Microsoft for writing Windows programs. Visual Studio .NET can also be used to write managed applications in C#, C++, Visual Basic and any other language (such as Perl) that a third party has integrated into the environment.

Visual Studio .NET itself is a partially managed application and requires the .NET Framework to run. Visual Studio .NET is a very user-friendly and productive environment in which to write managed applications. It includes many helpful wizards for generating code, as well as useful features such as syntax coloring, integrated online help, auto-completion and edit-time error notification. But you do not need Visual Studio .NET to develop or execute managed software.

I am not suggesting that you avoid Visual Studio .NET. It is a great product. In fact, a large portion of a later tutorial in this series is devoted to teaching you to get the most out of Visual Studio as a C# programmer. But it is important that you recognize Visual Studio .NET and the .NET Framework as different products.

The Framework is the infrastructure for managed code. The .NET Framework includes the CLR as well as other components that I will be discussing shortly. The .NET Framework also ships with an SDK (Software Development Kit) that includes command-line compilers for C#, C++, Visual Basic, and IL.

The bottom line is that the Framework is all you need to develop C# applications. That being said, Visual Studio .NET can increase your enjoyment and productivity significantly.

4 Reusable Components and the FCL

Up to this point I have spoken quite a bit about the goals of the .NET Framework, as well as what it means to write managed code and what the CLR does for your software. The Common Language Runtime is the foundation for everything managed, and as such is a very important piece of the .NET puzzle. But in your day-to-day programming you will spend much more energy discovering, using, and extending the reusable components found in the Framework Class Library.

The Framework Class Library (FCL) is nothing short of a massive collection of classes, structures, enumerated types and interfaces defined and implemented for reuse in your managed software. The classes in the FCL facilitate everything from file I/O and data structure manipulation to manipulating windows and other GUI elements. The FCL also has advanced classes for creating web and distributed applications.

Before diving headlong into the FCL, I would like to take a little time to address code reuse in general.

4.1 Object Oriented Code Reuse

Code reuse has been a goal for computer scientists for decades. Part of the promise of object oriented programming is flexible and advanced code reuse. The CLR is a platform designed from the ground up to be object oriented, and therefore to promote all of the goals of object oriented programming.

Today, most software is written nearly from scratch. The unique logic of most applications can usually be described in a few brief statements, and yet most applications include many thousands or millions of lines of custom code to achieve their goals. This cannot continue forever.

In the long run, the software industry will simply have too much software to write to keep writing every application from scratch. Therefore, systematic code reuse is a necessity.

Rather than go into a lengthy explanation about why OO and code reuse are difficult-but-necessary, I would like to mention some of the rich features of the CLR that promote object oriented programming.

· The CLR is an object oriented platform from IL up. IL itself includes many instructions for working with objects, such as newobj (create an object), callvirt (call a virtual method) and castclass (cast an object to a type).

· The CLR promotes a homogeneous view of types, where every data type in the system, including primitive types, is an object derived from a base object type called System.Object. In this respect literally every data element in your program is an object and has certain consistent properties.

· Managed code has rich support for object oriented constructs such as classes, structures, interfaces and enumerated types, along with members such as properties. Classes, structures, interfaces and enumerated types are collectively referred to as types in managed code.

· Managed code introduces new object oriented constructs including custom attributes, advanced accessibility, and static constructors (which allow you to initialize types, rather than instances of types) to help fill in the places where other object oriented environments fall short.

· Managed code can make use of pre-built libraries of reusable components. These libraries are called managed assemblies, and they are the basic building block of binary composability. (Reusable components are packaged in files called assemblies; technically, even a managed executable is a managed assembly.)

· Binary composability allows your code to use other objects seamlessly, without needing the third party’s source code or having to compile it. (This is possible largely because of the rich descriptions of code maintained in the metadata.)

· The CLR has very strong versioning support. Your applications will be composed of many objects published in many different assemblies (files), yet they need not suffer from versioning problems as new versions of the various pieces are installed on a system. Each assembly’s metadata records the version of every assembly it references, so the CLR knows exactly which version of a component a particular application needs (for strongly named assemblies, the CLR uses this to load exactly that version).
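The following C# sketch illustrates three of the features listed above. It shows the unified type system rooted at System.Object, a static constructor, and a custom attribute read back through metadata. The Config type and VersionNote attribute are hypothetical names chosen for illustration.

```csharp
using System;

// A custom attribute: extra descriptive information stored in metadata.
[AttributeUsage(AttributeTargets.Class)]
class VersionNoteAttribute : Attribute
{
    public readonly string Note;
    public VersionNoteAttribute(string note) { Note = note; }
}

[VersionNote("Initial release")]
class Config
{
    public static readonly DateTime LoadedAt;

    // A static constructor initializes the type itself, once, before its first use.
    static Config()
    {
        LoadedAt = DateTime.Now;
    }
}

static class TypeDemo
{
    // Reads the attribute back from the type's metadata (null if it is absent).
    public static string NoteFor(Type type)
    {
        VersionNoteAttribute attribute =
            (VersionNoteAttribute)Attribute.GetCustomAttribute(type, typeof(VersionNoteAttribute));
        return attribute == null ? null : attribute.Note;
    }

    static void Main()
    {
        // Even a primitive int is a System.Int32, which derives (via System.ValueType)
        // from System.Object, so it shares the members every object has.
        int count = 42;
        object boxed = count;                               // an int can be treated as an object
        Console.WriteLine(boxed.GetType().FullName);        // System.Int32
        Console.WriteLine(typeof(int).BaseType.FullName);   // System.ValueType
        Console.WriteLine(count.ToString());                // 42

        Console.WriteLine(NoteFor(typeof(Config)));         // Initial release
        Console.WriteLine(Config.LoadedAt != DateTime.MinValue);  // True
    }
}
```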

These features and more build upon and extend previous object oriented platforms. In the long run, object oriented platforms like the .NET Framework will change the way applications are built. Moving forward, a larger and larger percentage of the new code that you write will relate directly to the unique aspects of your application. Meanwhile, the standard bits that show up in many applications will be published as reusable and extensible types.

4.2 The Framework Class Library

Now that you have a taste of the goals and groundwork laid by the CLR and managed code, let’s taste the fruits that it bears. The Framework Class Library is the first step toward the end solution of component-based applications. If you like, you can use it like any other library or API; that is, you can write applications that use the objects in the FCL to read files, display windows, and perform various other tasks. But to exploit the true possibilities, you can extend the FCL toward your application’s needs and then write a very thin layer that is just “application code”. The rest is reusable types and extensions of reusable types.

The FCL is a class library, but it has been designed for extensibility and composability. This is advanced reuse.

Take, for example, the stream classes in the FCL. The designers of the FCL could have defined file streams and network streams and been done with it. Instead, all stream classes are derived from a base class, called System.IO.Stream. The FCL defines two main kinds of streams: Streams that communicate with devices (such as files, networks and memory), and streams whose devices are other instances of stream derived classes. These abstracted streams can be used for IO formatting, buffering, encryption, data compression, Base-64 encoding, or just about any other kind of data manipulation.

The result of this kind of design is a simple set of classes with a simple set of rules that can be combined in a nearly infinite number of ways to produce the desired effect. Meanwhile, you can derive your own stream classes, which can be composed along with the classes that ship with the Framework Class Library. The following sample applications demonstrate streams and FCL composability in general.

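using System;
using System.IO;

class App{
   public static void Main(String[] args){
      try{
         Stream fromStream =
            new FileStream(args[0], FileMode.Open, FileAccess.Read);
         Stream toStream =
            new FileStream(args[1], FileMode.Create, FileAccess.Write);

         using (fromStream)
         using (toStream){
            // Read every byte of the source file into memory. Read may return
            // fewer bytes than requested, so keep reading until the buffer is full.
            Byte[] buffer = new Byte[fromStream.Length];
            int count = 0;
            while (count < buffer.Length){
               int read = fromStream.Read(buffer, count, buffer.Length - count);
               if (read == 0) break;   // the file ended early
               count += read;
            }

            // Write the bytes back out to the new file.
            toStream.Write(buffer, 0, count);
         }  // leaving the using blocks flushes and closes both files
      }catch{
         Console.WriteLine("Usage: FileToFile [FromFile] [ToFile]");
      }
   }
}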

Figure 4‑1 FileToFile.cs

The code in Figure 4‑1 demonstrates a very simple file copy application. In brief, this application attempts to open a file, read every byte of the file into memory, and then write every byte in memory back out to a new file. If at any point anything fails, the application just prints the usage string for the application (arguably not the best error recovery scheme, but good for an example).

Now look at the following code, which makes a few small changes to the code in Figure 4‑1: it adds a using directive for System.Security.Cryptography and wraps the output file stream in a CryptoStream.

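using System;
using System.IO;
using System.Security.Cryptography;

class App{
    public static void Main(String[] args){
        try{
            using (Stream fromStream =
                       new FileStream(args[0], FileMode.Open, FileAccess.Read))
            using (Stream fileStream =
                       new FileStream(args[1], FileMode.Create, FileAccess.Write))
            // The one real change: wrap the output file in a CryptoStream
            // that base-64 encodes everything written to it.
            using (Stream toStream = new CryptoStream(fileStream,
                       new ToBase64Transform(), CryptoStreamMode.Write)){
                Byte[] buffer = new Byte[fromStream.Length];
                int total = 0, n;
                while (total < buffer.Length &&
                       (n = fromStream.Read(buffer, total, buffer.Length - total)) > 0){
                    total += n;
                }
                toStream.Write(buffer, 0, total);
            } // Disposing the CryptoStream writes its final, padded block.
        }catch{
            Console.WriteLine("Usage: FileToBase64 [FromFile] [ToFile]");
        }
    }
}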

Figure 4‑2 FileToBase64.cs

The only significant change from the previous example is the statement that wraps the output file stream in a CryptoStream. It news up an instance of CryptoStream (one of the composable stream classes I have been talking about) along with an instance of a helper class called ToBase64Transform. Together these classes turn toStream into a base-64 encoding machine: every byte written to it is encoded before it reaches the file. So a simple file copy program has become a program with significantly more complex functionality. It base-64 encodes a file and saves the result to a second file.

Note: Base-64 encoding is a standard way to represent arbitrary binary data as text, using only 64 printable ASCII characters (A-Z, a-z, 0-9, + and /, with = used for padding). Every three input bytes become four output characters. Base-64 encoding and decoding is useful for transferring binary data over the Internet, through firewalls, and through other channels designed to carry text.
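Base-64 maps every three input bytes (24 bits) onto four 6-bit characters drawn from A-Z, a-z, 0-9, + and /, and pads a short final group with =. The following small sketch makes that rule concrete. It uses the FCL’s Convert.ToBase64String and Convert.FromBase64String methods, which are a one-shot alternative to the streaming CryptoStream approach in Figure 4‑2. The Base64Demo class and its Encode/Decode helpers are illustrative names of my own.

```csharp
using System;
using System.Text;

class Base64Demo
{
    // Encodes ASCII text as base-64 using the FCL's Convert class.
    public static string Encode(string ascii)
    {
        return Convert.ToBase64String(Encoding.ASCII.GetBytes(ascii));
    }

    // Decodes base-64 text back to the original ASCII string.
    public static string Decode(string base64)
    {
        return Encoding.ASCII.GetString(Convert.FromBase64String(base64));
    }

    public static void Main()
    {
        // Three input bytes (24 bits) become four 6-bit characters.
        Console.WriteLine(Encode("Man"));  // TWFu
        // A short final group is padded with '='.
        Console.WriteLine(Encode("Ma"));   // TWE=
        Console.WriteLine(Encode("M"));    // TQ==
        Console.WriteLine(Decode("TWFu")); // Man
    }
}
```

The output is always a multiple of four characters. As a result, base-64 text is roughly a third larger than the binary data it encodes.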

Here is how the code reuse goal is achieved in this example. Although FileToBase64 and FileToFile do quite different things, the basic idea of both applications is the same: they copy data from one file to another. Their source code is about 90% identical, and nearly all of the difference in behavior comes from which reusable objects are plugged together.
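You can push the same idea one step further by factoring the copy loop into a method that accepts any pair of streams. The following sketch shows this, under a few assumptions. The CopyAll helper and the ReuseDemo class are hypothetical illustrations, not FCL APIs. Stream.CopyTo, added in .NET Framework 4, does the same job as CopyAll. Memory streams stand in for the files so the sketch runs without command-line arguments.

```csharp
using System;
using System.IO;
using System.Security.Cryptography;
using System.Text;

class ReuseDemo
{
    // Copies every byte from one stream to another. It knows nothing about
    // files, memory, or encoding, so any pair of streams can be passed in.
    public static void CopyAll(Stream source, Stream destination)
    {
        byte[] chunk = new byte[4096];
        int n;
        while ((n = source.Read(chunk, 0, chunk.Length)) > 0)
        {
            destination.Write(chunk, 0, n);
        }
    }

    // "FileToFile" behaviour, with memory standing in for the files.
    public static byte[] PlainCopy(byte[] input)
    {
        MemoryStream output = new MemoryStream();
        CopyAll(new MemoryStream(input), output);
        return output.ToArray();
    }

    // "FileToBase64" behaviour: the same CopyAll, but the destination is
    // wrapped in a CryptoStream that applies ToBase64Transform.
    public static string Base64Copy(byte[] input)
    {
        MemoryStream output = new MemoryStream();
        using (CryptoStream encoder = new CryptoStream(
                   output, new ToBase64Transform(), CryptoStreamMode.Write))
        {
            CopyAll(new MemoryStream(input), encoder);
        } // Disposing the CryptoStream flushes the final (padded) block.
        return Encoding.ASCII.GetString(output.ToArray());
    }

    public static void Main()
    {
        byte[] data = Encoding.ASCII.GetBytes("Man");
        Console.WriteLine(PlainCopy(data).Length); // 3
        Console.WriteLine(Base64Copy(data));       // TWFu
    }
}
```

The two "applications" now differ by a single wrapping stream. CryptoStream buffers partial three-byte groups internally, so the 4096-byte chunk size does not affect the encoded output.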

Many classes in the FCL promote this kind of programming, and so should the reusable component classes that you write. To reach this end you must be comfortable with the FCL in general.
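The following sketch shows a stream class of your own. The hypothetical UpperCaseStream wraps another stream and upper-cases ASCII letters as they are written. It overrides the abstract members of System.IO.Stream and supports only writing. For the members it does not support, it throws NotSupportedException, which is the convention the FCL documents for streams that cannot read or seek. The class and its demo are my own illustration, not part of the FCL.

```csharp
using System;
using System.IO;
using System.Text;

// Hypothetical example: a write-only stream that upper-cases ASCII letters
// before passing the bytes on to the stream it wraps.
class UpperCaseStream : Stream
{
    private readonly Stream inner;

    public UpperCaseStream(Stream inner) { this.inner = inner; }

    public override bool CanRead { get { return false; } }
    public override bool CanSeek { get { return false; } }
    public override bool CanWrite { get { return true; } }

    public override void Write(byte[] buffer, int offset, int count)
    {
        byte[] copy = new byte[count];
        for (int i = 0; i < count; i++)
        {
            byte b = buffer[offset + i];
            // In ASCII, 'a'..'z' sit exactly 32 above 'A'..'Z'.
            copy[i] = (b >= (byte)'a' && b <= (byte)'z') ? (byte)(b - 32) : b;
        }
        inner.Write(copy, 0, count);
    }

    public override void Flush() { inner.Flush(); }

    protected override void Dispose(bool disposing)
    {
        if (disposing) inner.Dispose(); // Closing this stream closes the wrapped one.
        base.Dispose(disposing);
    }

    // Members that a write-only, non-seekable stream does not support.
    public override long Length { get { throw new NotSupportedException(); } }
    public override long Position
    {
        get { throw new NotSupportedException(); }
        set { throw new NotSupportedException(); }
    }
    public override int Read(byte[] buffer, int offset, int count) { throw new NotSupportedException(); }
    public override long Seek(long offset, SeekOrigin origin) { throw new NotSupportedException(); }
    public override void SetLength(long value) { throw new NotSupportedException(); }
}

class CustomStreamDemo
{
    // Writes text through an UpperCaseStream layered over a MemoryStream.
    public static string Shout(string text)
    {
        MemoryStream output = new MemoryStream();
        using (Stream upper = new UpperCaseStream(output))
        {
            byte[] bytes = Encoding.ASCII.GetBytes(text);
            upper.Write(bytes, 0, bytes.Length);
        }
        return Encoding.ASCII.GetString(output.ToArray());
    }

    public static void Main()
    {
        Console.WriteLine(Shout("hello, streams")); // HELLO, STREAMS
    }
}
```

UpperCaseStream accepts any Stream, so it can sit anywhere in a chain. For example, it could wrap a CryptoStream like the one in Figure 4‑2, which in turn wraps a FileStream.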

4.3 Using the FCL

“Give a man a fish and you feed him for a day. Teach a man to fish and you feed him for a lifetime.” The I/O stream example in the last section was like me giving you a fish. With the FCL it is much better to learn how to fish, because the Framework Class Library is so expansive and you will use it every time you write managed code. So I am going to give you the information you need to teach yourself the Framework Class Library.

The following is a list of points about the FCL that will help you to learn to use the library.

· The many classes, interfaces, structures and enumerated types in the Framework Class Library are collectively referred to as types.

· The various types in the framework are arranged in a hierarchy of namespaces. This solves the problem of name collisions. In day-to-day use, however, namespaces help programmers find types that solve a certain kind of problem. They also help programmers find related types that deal with the same problem (such as the I/O types that live in the System.IO namespace).

· Namespaces themselves live in a hierarchy and are arranged as words separated by the period “.” character. From the CLR’s point of view a type’s name is its fully qualified name including namespaces. Therefore we may write code that uses a Stream class or a Form class, but in IL these types are represented as System.IO.Stream and System.Windows.Forms.Form respectively.

· Languages such as C# allow you to indicate which namespaces a specific source code file will use, so that in the source code you can refer to types by their short names. The using System.Security.Cryptography; line in Figure 4‑2 is an example of the using directive in C#. I added this line to tell the compiler that the source uses types from the System.Security.Cryptography namespace of the FCL. In this example those types were CryptoStream, ToBase64Transform and CryptoStreamMode.

· The System namespace is a good place to look for types that are useful across a wide range of applications.

· Every class, structure, and enumerated type has a base class (including the types that you define in your own code). The exception to this rule is System.Object, which is the root of the type hierarchy. (If you create a class that does not explicitly declare a base class, the compiler implicitly makes Object its base class.)

· The facts in this list plus comfort with the .NET Framework SDK Documentation will really bootstrap your skills with managed code.
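Here is a short sketch of several points from this list: short names versus fully qualified names, and the implicit Object base class. The Widget and NamespaceDemo classes are made-up examples. typeof, Type.FullName and Type.BaseType are standard reflection members.

```csharp
using System;
using System.IO;

// No base class is declared, so the compiler makes System.Object the base.
class Widget
{
}

class NamespaceDemo
{
    public static void Main()
    {
        // Thanks to "using System.IO;" the short name Stream is enough here,
        // but the runtime knows the type by its fully qualified name.
        Console.WriteLine(typeof(Stream).FullName); // System.IO.Stream

        // Without a using directive, the fully qualified name still works.
        Console.WriteLine(typeof(System.Security.Cryptography.CryptoStream).FullName);
        // System.Security.Cryptography.CryptoStream

        // Widget's implicit base class is System.Object.
        Console.WriteLine(typeof(Widget).BaseType == typeof(object)); // True

        // System.Object is the root, so it has no base class.
        Console.WriteLine(typeof(object).BaseType == null); // True
    }
}
```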

4.4 The .NET Framework SDK Documentation

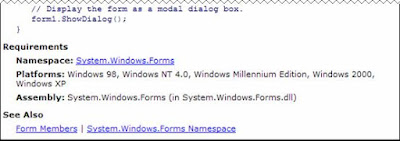

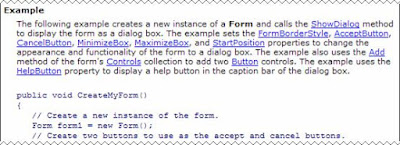

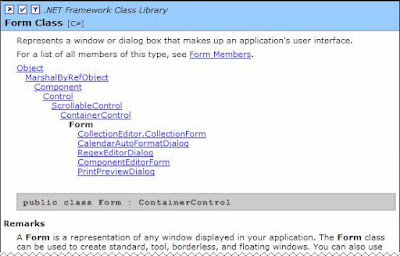

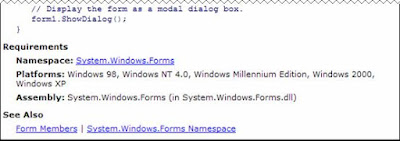

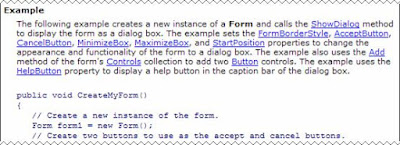

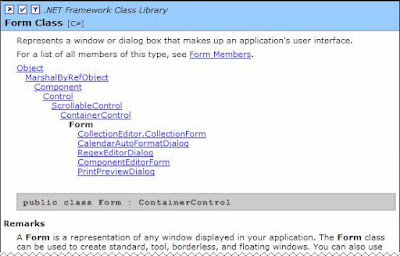

When you install Visual Studio .NET or the .NET Framework SDK you should make a point of installing the full documentation for the SDK. This is important. The SDK Documentation includes a wealth of great information. In fact, there is so much information in the docs that it can be overwhelming, so I am going to point out a limited set of topics that you should read.

The first topic in the table of contents for the .NET Framework SDK docs is called Getting Started with the .NET Framework->Overview of the .NET Framework. It is a short read and you should read it first. It is not nearly as detailed as this tutorial, but it will get you started with the SDK docs, and point you in the direction of other interesting topics.